Artificial intelligence-powered weapons have radically transformed the battlefield. But can, or should, they also be made to follow ethical rules?

The digital revolution is changing our world in incredible ways, challenging conventional wisdom, values and ethics which most of us took for granted.

And perhaps nowhere is this more obvious than on the battlefield.

Although many nations still practise the tried and tested military tactics and techniques of World War II vintage, major powers, particularly the US, China, Russia and Israel, are grappling with the moral, ethical, tactical and strategic implications of the growing use of artificial intelligence (AI) and of autonomous weapons that operate increasingly independently of human intervention.

“Autonomous weapons would lack the human judgment necessary to evaluate the proportionality of an attack, distinguish civilian from combatant, and abide by other core principles of the laws of war,” says Stop Killer Robots, an outfit working with military veterans, tech experts, scientists, roboticists, and civil society organisations around the world to ensure meaningful human control over the use of force.

“History shows their use would not be limited to certain circumstances. It’s unclear who, if anyone, could be held responsible for unlawful acts caused by an autonomous weapon – the programmer, manufacturer, commander, or machine itself – creating a dangerous accountability gap,” says the group.

Systems that do not require a human order to shoot are already in use. But they are mostly defensive in nature, and are programmed to fire when a specific, predetermined line is crossed. These include the US ship-based Mk 15 Phalanx Close-In Weapon System, South Korea’s SGR-A1 sentry robot, and Israel’s Iron Dome anti-missile system. But these are considered automatic, rather than autonomous.

Then there are unmanned aerial vehicles, or drones, such as the US MQ-1 Predator and MQ-9 Reaper, which give battlefield commanders unprecedented situational awareness through powerful onboard sensors, and can ‘loiter’, identify, target and destroy (‘Find, Fix, Fire, Finish’) adversaries. They have been used to track terrorists in Yemen, Afghanistan and Pakistan, missiles in North Korea, and drug cartels in Mexico. But though they can identify, search for and follow predefined targets, they can only use lethal force when a human operator gives the order. In theory, however, it would take just another line or two of code to remove the human from the loop completely.

Popular sci-fi books and Hollywood blockbusters, in which cyborgs and killer robots revolt against mankind, have led to legitimate as well as ludicrous fears, not just in civil society but also among a section of security analysts. They sanctimoniously point to English author Mary Shelley’s 1818 novel Frankenstein; or, The Modern Prometheus, as an example of what could go wrong. But most of these concerns revolve around the ability of AI-enabled weapons (or even of robots proposed as replacements for regular soldiers) to differentiate between friend and foe, and between the enemy and hapless civilians.

While there is no agreed international definition of lethal autonomous weapon systems (LAWS), a 2012 US Department of Defense (DoD) directive, Directive 3000.09, updated in 2020, defines LAWS as “weapon system[s] that, once activated, can select and engage targets without further intervention by a human operator,” also known as “human out of the loop” or “full autonomy.”

Other categories include human-supervised, or “human on the loop,” autonomous weapon systems, which allow operators to monitor and halt a weapon’s target engagement, and semi-autonomous, or “human in the loop,” weapon systems that “only engage individual targets or specific target groups that have been selected by a human operator.” These include “fire and forget” weapons, such as guided missiles, which operate autonomously once they have been programmed with specific targets.

While the directive excludes “autonomous or semiautonomous cyberspace systems for cyberspace operations; unarmed, unmanned platforms; unguided munitions; munitions manually guided by the operator (e.g., laser- or wire-guided munitions); mines; [and] unexploded explosive ordnance,” it mandates that all systems, including LAWS, be designed to “allow commanders and operators to exercise appropriate levels of human judgment over the use of force.” This basically means that humans must decide how, when, where, why and in what quantity these weapons can be used, “with appropriate care and in accordance with the law of war, applicable treaties, weapon system safety rules, and applicable rules of engagement.” It also requires that any change or upgrade to a system’s operating state be followed by full re-testing and evaluation.

“Autonomous weapons select and engage targets without human intervention,” begins an open letter released in July 2015 at the International Joint Conference on Artificial Intelligence (IJCAI), an annual gathering of AI practitioners and researchers. “They might include, for example, armed quadcopters that can search for and eliminate people meeting certain pre-defined criteria, but do not include cruise missiles or remotely piloted drones for which humans make all targeting decisions. AI technology has reached a point where the deployment of such systems is — practically if not legally — feasible within years, not decades, and the stakes are high: autonomous weapons have been described as the third revolution in warfare, after gunpowder and nuclear arms,” it said.

The letter, signed by over 40,000 AI professionals so far, argues for a ban on such weapons, because “It will only be a matter of time until they appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing. Autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group. We therefore believe that a military AI arms race would not be beneficial for humanity. There are many ways in which AI can make battlefields safer for humans, especially civilians, without creating new tools for killing people.”

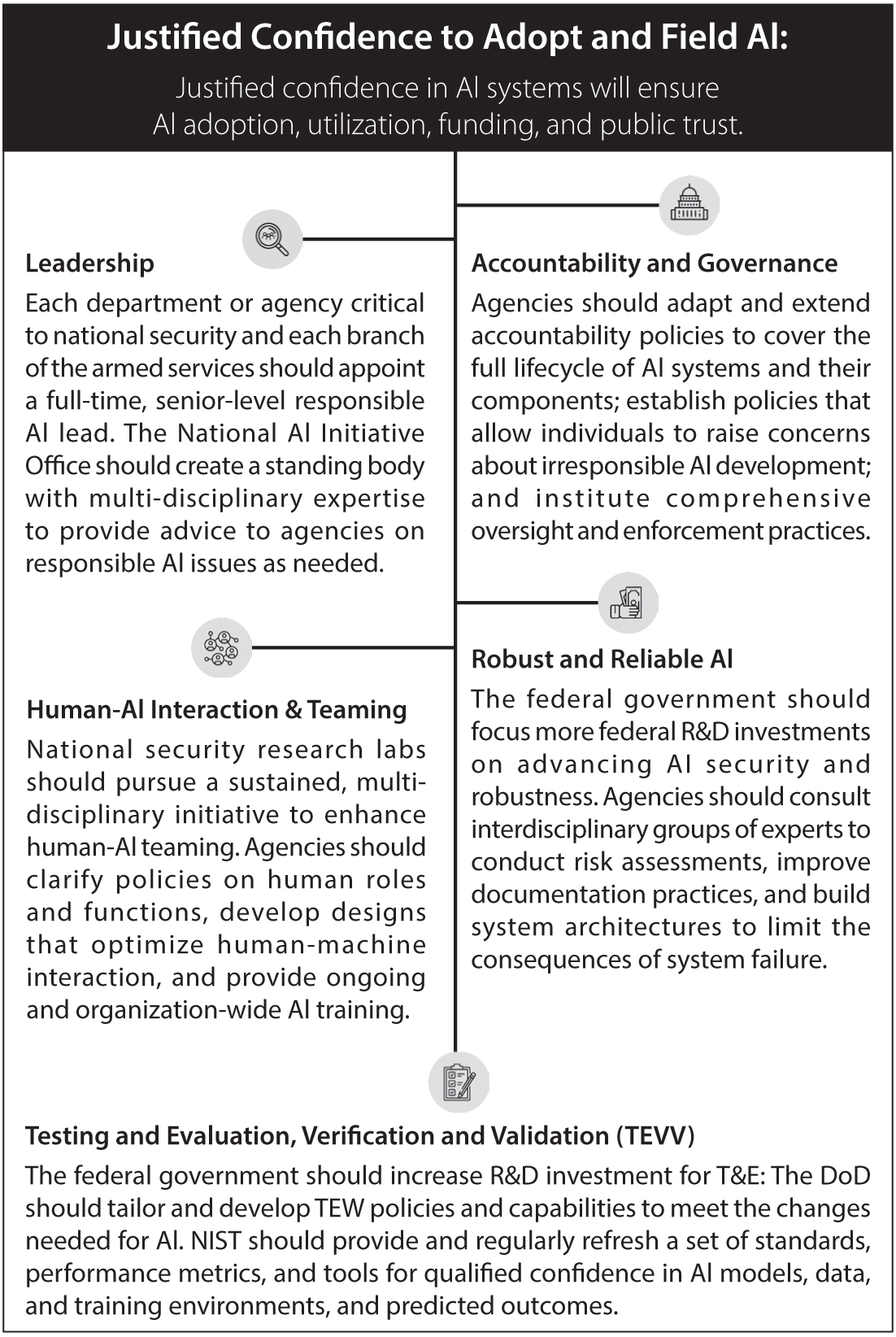

It was probably to address these fears that, on 24 February 2020, the US Department of Defense adopted a set of ethical principles meant to reassure citizens (and perhaps the rest of the ‘free’ world) that all weapons using artificial intelligence would be designed and deployed in an ethical manner. Fundamentally, these principles revolve around Responsibility, Equitability, Traceability, Reliability and Governability.

In July 2021, speaking to the National Security Commission on Artificial Intelligence, US Secretary of Defense Lloyd Austin said: “…our use of AI must reinforce our democratic values, protect our rights, ensure our safety and defend our privacy.” Noting that over the next five years the Department would invest nearly USD 1.5 billion to accelerate the adoption of AI, he pointed out that “obviously, we aren’t the only ones who understand the promise of AI. China’s leaders have made clear they intend to be globally dominant in AI by the year 2030. Beijing already talks about using AI for a range of missions, from surveillance to cyber attacks to autonomous weapons. In the AI realm as in many others, we understand that China is our pacing challenge.” But unlike China, “we have a principled approach to AI that anchors everything that this Department does. We call this Responsible AI, and it’s the only kind of AI that we do.”

The three main arguments in favour of the use of AI in war are:

- Reduction in civilian casualties.

- Reduction in the need for military personnel.

- Reduction in overall harm and collateral damage due to precise targeting.

In fact, in a hot combat environment, AI might do a better job than humans.

The United Nations Convention on Certain Conventional Weapons (CCW) has held meetings since 2014 to discuss the technological, military, legal, and ethical dimensions of “emerging technologies in the area of lethal autonomous weapon systems (LAWS)” and examine whether they will be capable of complying with International Humanitarian Law, and whether additional measures are necessary to ensure that humans maintain an appropriate degree of control over the use of force.

While it is possible to inject some level of ethics into AI-driven weapons, American commanders on the ground remain sceptical. Would these ‘ethical’ weapons not be at a distinct military (and economic, given that coding ethics into robots is expensive) disadvantage when enemy nations such as China and Russia, or even a well-funded terror outfit, deploy weapons that carry no such ethical constraints? And when even regular human soldiers have trouble differentiating between civilians and the terrorists who use them as human shields, how can artificial intelligence be tweaked to do so without radically cutting its efficiency? At the end of the day, the future soldier, whether assisted or replaced entirely by AI-enabled robots, must be able to take out threats before being eliminated by them.

“Americans have not yet grappled with just how profoundly the AI revolution will impact our economy, national security, and welfare. Much remains to be learned about the power and limits of AI technologies. Nevertheless, big decisions need to be made now to accelerate AI innovation to benefit the United States and to defend against the malign uses of AI,” begins the 756-page ‘Final Report’ of the National Security Commission on Artificial Intelligence, submitted to the US Congress in March 2021.

“As a bipartisan commission of 15 technologists, national security professionals, business executives, and academic leaders, the National Security Commission on Artificial Intelligence (NSCAI) is delivering an uncomfortable message: America is not prepared to defend or compete in the AI era. This is the tough reality we must face,” it said.

Insisting that the US and its allies should reject calls for a global ban on AI-powered autonomous weapon systems, the report argues that AI will “compress decision time frames” to a point where humans alone cannot respond quickly enough, and warns that Russia and China would be unlikely to honour any such treaty.

If autonomous weapons systems are properly tested and authorised for use by a human, they should be consistent with International Humanitarian Law, it says, urging that by 2025, “the Department of Defense and the Intelligence Community must be AI-ready.”

Urging Congress to get allies and partners on board to help, it says “China’s plans, resources, and progress should concern all Americans. It is an AI peer in many areas and an AI leader in some applications. We take seriously China’s ambition to surpass the United States as the world’s AI leader within a decade.”

Noting that the commission engaged with hundreds of representatives from the private sector, academia, civil society, and across the government, as well as “anyone who thinks about AI, works with AI, and develops AI who was willing to make time for us,” the NSCAI executive director, Ylli Bajraktari, said: “We found consensus among nearly all of our partners on three points: the conviction that AI is an enormously powerful technology, acknowledgement of the urgency to invest more in AI innovation, and responsibility to develop and use AI guided by democratic principles. We also talked to our allies—old and new. From New Delhi to Tel Aviv to London, there was a willingness and desire to work with the United States to deepen cooperation on AI.”

Describing it as “a shocking and frightening report that could lead to the proliferation of AI weapons making decisions about who to kill,” Prof Noel Sharkey, spokesman for the Campaign to Stop Killer Robots, said that if implemented, the recommendations of the NSCAI “will lead to grave violations of international law.”

Chinks in the Bamboo Curtain

While the consensus in Western military circles appears to be that the People’s Liberation Army (PLA) will have no ethical or moral compunctions in its race to become the undisputed leader in AI by 2030, it is perhaps pertinent to refer to an article titled ‘Random Discussion on Legal Issues of Intelligent Warfare’, published on Guangming Net (Guangming Daily) on 20 July 2019, which almost mirrors the concerns raised by civil society in the West.

“Mahatma Gandhi once said there are seven things that can destroy us, and ‘science without humanity’ is one of them,” says the author, Liang Jie, Associate Professor, Department of Military Judicial Work, School of Political Science, National Defense University, Beijing.

“At present, artificial intelligence technology with ‘deep learning’ algorithms at its core enables intelligent combat platforms to understand human combat needs through large-scale data analysis and learning, and to participate directly in combat operations as creative partners. It can obtain information, judge the situation, make decisions, and deal with situations relatively independently on the battlefield, and is gradually shifting from assisting human combat to replacing human combat,” he says. But “while exclaiming that ‘the era of unmanned warfare is coming,’ people cannot help worrying about whether human control will be lost in future wars. We should clearly realize that no matter how intelligent military technology becomes, it should not change the ultimate decisive role of man in war. After all, technology is a tool for mankind to achieve combat goals and should not be separated from human control.”

Chinese scientists and engineers have also released a code of ethics for artificial intelligence that seems remarkably similar to those being framed in the West. The Beijing AI Principles, released in May 2019 by the Beijing Academy of Artificial Intelligence (BAAI), an organization backed by the Chinese Ministry of Science and Technology and the Beijing municipal government, spell out guiding principles for research and development in AI, including that “human privacy, dignity, freedom, autonomy, and rights should be sufficiently respected.”

While it is true that Liang’s article and the principles could just be an attempt by Beijing to show that it is aligned with international humanitarian law, even as it goes ahead on war footing to develop weapons that do not necessarily comply with these laws, the very fact that such an article was run is significant, because it shows that the Chinese are aware of the complex issues and public sentiment involved.

Moscow Mule

Similarly, the argument that Russia has no ethical framework for the use of artificial intelligence in war seems presumptuous, although again, whether Moscow will walk its talk remains unclear.

“While Russia is not a leader in commercial and academic AI research — as the United States and China are… The Russian military seeks to be a leader in weaponizing AI technology,” Lt Gen Michael Groen, director of the Pentagon’s Joint Artificial Intelligence Center, told National Defense magazine.

While pushing for extensive research and development of AI-powered weapons, which he sees as essential to Russia’s security and growth, President Vladimir Putin also acknowledges that they could be dangerous and require internationally accepted restrictions. In his speech to the UN General Assembly in September last year, he urged members both to encourage the use of AI and to seek regulations that balance military and technological security with traditions, law and morality. A year earlier, Russian Security Council Secretary Nikolai Patrushev called for a comprehensive international regulatory framework to be put in place “as quickly as possible” to keep AI from undermining national and international security. But while endorsing ‘meaningful human control’ over lethal autonomous weapon systems, Russia also argues that the UN call to regulate and limit LAWS is premature, apparently because AI is not yet capable of driving truly autonomous weapons.

Yet in August 2021, Russian Prime Minister Mikhail Mishustin approved the “Concept for the development of regulation of relations in AI technologies and robotics until 2024,” which calls for new regulatory norms for the ethical development and deployment of AI and robotics. Developers are urged to ensure that AI is based on basic ethical standards, to make a “reasonable assessment” of risks to human life and health, and to comply with state laws and safety requirements.

The Israeli connection

When the Israeli-Palestinian conflict flared up once more in May this year, the Israel Defense Forces (IDF) responded to rocket attacks on Jerusalem by launching air strikes that destroyed thousands of Hamas and Palestinian Islamic Jihad (PIJ) targets in the Gaza Strip.

But this time, there was something different: the operation, dubbed “Guardian of the Walls,” relied on intelligence, both human and artificial. While minimising civilian casualties, it eliminated most of Hamas and PIJ’s rocket manufacturing facilities, command and control sites, weapon warehouses and rocket stocks. It also sank Hamas’s naval capabilities, blocked underground tunnels from Gaza into bordering Israeli villages and ‘neutralized’ several high-level commanders in both organizations. Here’s what Avi Kalo, who leads Frost & Sullivan’s defense consulting branch in Israel, says in a report:

“…the explosion of data and its widespread availability has been motivating IDF to evaluate AI-powered applications that streamline and analyze data, while strengthening military operational effectiveness. Hence, Israel’s recent Operation ‘Guardian of the Walls’ will go down in military history as the first military campaign where human-machine relations were really put to the test.

“Indeed, these highly sophisticated and unprecedented AI approaches are transforming all that we know about military CONOPS in the modern battlefield. Israel has been a frontrunner in this regard and armed forces across the world are set to steer a strategic course towards acquiring these game-changing capabilities. This is also the moment for commercial and military intelligence communities to seize the business opportunities that this presents as part of the ever-changing scenarios inherent in modern, asymmetric warfare.”

Israel has established itself as a pioneer of autonomous weapons such as the Harop ‘suicide drone’, which can autonomously attack any target that meets its preset criteria, but has a ‘man-in-the-loop’ facility that lets a human intervene if needed.

Then there is Israel’s Sentry-Tech border control system, deployed along the Gaza border to stop Palestinians from entering Israeli territory. Its automated ‘Robo-Snipers’, armed with heavy-duty 7.62 mm machine guns and explosive rockets, create “automated kill-zones” at least 1.5 km deep. If any turret detects human movement, the entire chain of guns can concentrate its firepower on the interloper. To increase its effectiveness, the system draws on information provided by a larger network of drones and ground sensors spanning a 60-kilometre border. But Rafael, Sentry-Tech’s manufacturer, insists that a human operator still has to make the decision to fire.

India, which faces a giant hostile neighbour to the north and its nuclear-armed lapdog to the west, could certainly use some AI-powered weapons, for operations ranging from border patrols to protecting military assets on the ground and in space. And just like the US, Russia, China and Israel, it should pay lip service to demands for international legislation governing their use until it has its own inventory of such weapons. While talks are on to acquire the Reaper from the US, and an indigenous missile-carrying drone is being tested, it is important to understand that a handful of such weapons will achieve little. For them to have real impact, they need to be integrated into the nation’s military doctrine and strategy. And that takes time, effort, and both political and military will.

The debate can continue over whether automated weapons can distinguish between soldiers and civilians, whether they can be held responsible for ‘mistakes’, or even whether weapons need ethics at all, given that doing so risks absolving human decision-makers of responsibility.

Because regardless of the arguments for and against AI-enabled weapons, the fact remains that they are already in use, and will become increasingly powerful, with or without ethics. And those without it are unlikely to find a place on the world’s top table anytime soon.

References

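- Liang Jie, ‘Random Discussion on Legal Issues of Intelligent Warfare’, Guangming Daily, 20 July 2019 (archived): https://web.archive.org/web/20200215011803/http://news.gmw.cn/2019-07/20/content_33013497.htm

- Beijing Academy of Artificial Intelligence, Beijing AI Principles, May 2019: https://www.baai.ac.cn/news/beijing-ai-principles-en.html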