Early in January 1942, Project X-Ray of the United States of America (USA) envisaged using the Mexican free-tailed bat, native to the south-western USA, as a retaliatory weapon against Japan for the attack on Pearl Harbour. The plan involved attaching small incendiary bombs to the bats and releasing them from cluster containers programmed to open at an altitude of 1,000 feet. The armed bats would then roost in Japanese buildings, which were largely made of wood and other flammable material, causing widespread fires when the incendiaries ignited. Although the plan was later cancelled as work on the atomic bomb took priority, Project X-Ray remains a remarkable early attempt to field cost-effective, lethal and autonomous weapons in modern warfare.

On November 12, 2017, a YouTube video released by the Future of Life Institute (a US-based non-profit organisation) depicted the existential dangers posed by ‘Slaughterbots’: miniature autonomous drones that use Artificial Intelligence (AI) to carry out lethal, targeted anti-personnel strikes. The video declares nuclear weapons obsolete in the face of this even more potent and looming threat, and prophesies the use of such Slaughterbots by state and non-state actors to achieve national, political or ideological objectives through mass extermination! Four years later, a sequel titled ‘Slaughterbots – if human: kill’ was released, reiterating the danger of autonomous weapons and advocating their ban at a time when massive strides were being made in AI and in the development of Lethal Autonomous Weapon Systems (LAWS).

Earlier, in April 2016, the International Committee of the Red Cross (ICRC), addressing the third meeting of experts on LAWS held in Geneva under the Convention on Certain Conventional Weapons (CCW), defined LAWS as ‘an umbrella term encompassing any weapon system that has autonomy in the critical functions of selecting and attacking targets’. Citing this broad classification, the ICRC goes on to state that the advantage of such a broad definition is to ‘facilitate the process of determining the boundaries of what is acceptable under IHL and the dictates of public conscience’ [1].

While opinions on LAWS swing between ethical and existential imbroglios on the one hand and their value as battle-winning Force Multipliers (FMs) on the other, it is worth weighing the advantages of their employment against the foreseeable threats that would arise from their accidental or deliberate uncontrolled use.

LAWS as FMs

The 21st century has witnessed the rise of unmanned systems. Weapon platforms manoeuvring through a pan-domain battlespace to carry out remote tasks without risk to human life have captured the imagination of armed forces and defence industry players alike. Unmanned platforms that augment the operational efficiency and lethality of manned systems, and thereby reduce the risk of human casualties, are now a reality in armed forces around the globe. While most such collaboration involves slaved platforms, the global defence arena is already seeing the advent of situationally aware, AI-enabled autonomous systems capable of independent operations.

CATS System Mounted on Tejas LCA at Aero India 2021: 1-CATS-Warrior (CW), 2-ALFA-S, 3-CATS-Hunter (CH) (Source: infotonline.com)

In the aerospace domain, the concept of the Unmanned Wingman (UW), an Unmanned Aerial System (UAS) capable of supporting or augmenting the operations of manned aircraft in a contested aerial environment, is set to revolutionise air operations. Such UWs could either be teamed with manned platforms at different levels of interoperability or be AI-enabled and capable of autonomous operation. Last year, the Pentagon awarded a $93 million contract to General Atomics Aeronautical Systems Inc. to equip the MQ-9 Reaper Unmanned Combat Aerial Vehicle (UCAV) with AI technology enabling autonomous operation and targeting, even in the event of loss of communications or GPS inputs.

As part of this global trend, India has entered the fray with the Combat Air Teaming System (CATS), developed by Hindustan Aeronautics Limited (HAL) in collaboration with partners. The system comprises a manned aircraft or ‘mother ship’, presently the Tejas Light Combat Aircraft (LCA), and stealthy UWs with surveillance or weapons payloads, deployed individually or in swarms and capable of interoperability and autonomous operation. These include the CATS-Warrior (CW) and the CATS-Hunter (CH). The CW is an autonomous armed UAS that can launch up to 24 Air-Launched Flexible Asset-Swarm (ALFA-S) drones, each in turn capable of autonomous target acquisition and lock-on. The CH is a stand-off Air-Launched Cruise Missile (ALCM) capable of autonomous target acquisition and attack.

India has also recently flight-tested the Stealth Wing Flying Test-bed (SWiFT), a fully autonomous technology demonstrator and precursor to the Ghatak, a stealthy, AI-enabled UCAV projected to be largely capable of human-independent operation. An illustration of autonomous LAWS that can be launched across domains is the ‘intelligent’ autonomous Loitering Munition (LM), such as Israel Aerospace Industries’ Harop kamikaze drone, used successfully by Azerbaijani forces in the Nagorno-Karabakh conflict to autonomously destroy command posts and troop carriers. The Indian Air Force also operates these drones. Worryingly, some non-state actors have reportedly modified commercially available drones into improvised LMs!

In the maritime domain, Maritime Unmanned Systems (MUS) include all unmanned surface and sub-surface vessels carrying the onboard payload and infrastructure needed to accomplish assigned Intelligence, Surveillance and Reconnaissance (ISR) or strike missions remotely, either under positive control from a manned station or autonomously. The NATO Factsheet of October 2021 [2] identifies MUS tasks as including Maritime Domain Awareness (MDA), monitoring and protection of Sea Lines of Communication, mine detection and clearance, and the detection and tracking of hostile vessels. These can also include strike missions against hostile maritime or shore-based targets, all achieved with minimal risk to human life and at a fraction of the operational cost! The Defense Advanced Research Projects Agency (DARPA) of the USA launched the Sea Hunter autonomous anti-submarine MUS in 2016. In 2018-2019, this MUS autonomously navigated an 8,300-km swathe of the North Pacific Ocean, demonstrating a capability for long-distance navigation that could enable remote expeditionary ISR or strike missions around the globe!

China inducted the JARI MUS into the People’s Liberation Army (PLA) Navy in 2019. The patrol craft has a range of close to 1,000 km, giving it significant reach across the South China Sea. It is equipped with a 3D active phased-array radar and mast-mounted Electro-Optical (EO) surveillance systems for 360º coverage and target detection. The MUS mounts a 30mm remote-controlled cannon and can be fitted with SONAR and torpedo tubes for surface, sub-surface and aerial engagements. It would also be capable of operating in ‘clusters’ against multiple targets.

Land warfare is similarly witnessing the development of tactical Unmanned Ground Vehicles (UGVs): autonomous ground-based platforms with multiple or flexible payloads that carry out tactical combat and Combat Support (CS) missions. While the Soviet Union and Germany had developed remotely operated ground vehicles before and during World War II, DARPA, as part of its Strategic Computing Initiative for research into advanced robotics and AI, demonstrated the Autonomous Land Vehicle in 1985, widely regarded as the first UGV capable of fully autonomous navigation. Since combatant attrition is highest in land operations, the impetus among ground forces to incorporate UGVs into tactical planning for ISR, combat or CS missions is understandably high.

An example of an autonomous UGV is Estonia’s Tracked Hybrid Modular Infantry System (THeMIS), an armed multi-mission UGV designed for direct-fire support, ISR and CS missions. Its autonomous control system, which uses deep-learning algorithms and neural networks, manages navigation, obstacle avoidance and location- or event-specific behaviour. THeMIS mounts a remote-controlled weapon station that can be equipped with a 30mm cannon, machine-guns or guided missile/autonomous LM launchers. THeMIS is presently operated by ten European Union (EU) countries and the USA, and is operationally deployed in Operation Barkhane, the ongoing counter-insurgency operation in the Sahel region of Africa!

India’s DefExpo 2020 saw a Chennai-based entrepreneur showcase ‘Sooran’, an armoured, multi-terrain UGV with a mounted gun turret and ISR payload. The UGV is AI-enabled and can function remotely or autonomously, making it well suited to low-intensity tactical operations. In full-spectrum land operations, such autonomous UGVs could operate individually or in ‘herds’ (the ground equivalent of ‘swarms’) to overwhelm an adversary.

In a move aimed at building pan-domain synergy between autonomous UAS and UGV operations, researchers at the US Army Combat Capabilities Development Command (DEVCOM) are developing technology that will allow an autonomous UAS to land on, and recharge from, a moving UGV without the aid of GPS or communication between the two platforms, thereby extending operational reach.

In addition, Israel reportedly became the first country to use AI-enabled autonomous drone-swarms in actual combat, in May-June 2021, to neutralise Hamas terrorists in Gaza with no human input! The Russian Molniya, French Icarus and UK Blue Bear drone-swarms display similar technology. As a demonstration of disruptive technology, the Indian Army showcased precision targeting by a 75-strong autonomous drone-swarm during the Army Day Parade in 2021. A year later, during the Beating Retreat Ceremony in New Delhi on January 29, 2022, an Indian start-up staged an awe-inspiring 1,000-strong drone-swarm display under the ‘Make in India’ initiative, showcasing India’s prowess in drone-swarm technology, which could quickly be adapted for military use if desired.

Globally, as part of a collective vision for ‘NATO 2030’, an agreement was reached at the 2021 NATO Summit in Brussels to launch the Defence Innovation Accelerator for the North Atlantic (DIANA) [3], NATO’s counterpart to DARPA, which would strive, among other aims, to give impetus to NATO’s AI Strategy for meeting operational requirements, including support for autonomous military operations.

It is thus evident that LAWS, in any of the forms described above, represent a revolutionary paradigm in pan-domain warfighting that could surpass the efficiency of manned missions. They offer greater lethality, fewer platforms per mission and quicker responsiveness owing to shortened decision and data-processing loops, with none of the human shortcomings of fatigue or psychological strain and no risk to the lives of their own personnel. Accordingly, AI-enabled LAWS are likely to prove formidable FMs for armed forces in full-scale or low-intensity conflict, and are bound to find their way into military inventories worldwide in ever-increasing numbers in the years ahead.

Do LAWS Present an Ethical Quandary or an Existential Menace?

To answer this question, it would be prudent to examine the acceptability of LAWS against the statutes of the Laws of Armed Conflict (LOAC), under the larger umbrella of International Humanitarian Law (IHL). LOAC, established to regulate the conduct of armed hostilities, apply both to international armed conflict between States and to non-international armed conflict, such as a civil war or an insurgency (including an externally sponsored one) that reaches the threshold of armed conflict. LOAC would therefore cover virtually all the scenarios in which LAWS are likely to be employed. It must be borne in mind, however, that LOAC are frequently neither recognised nor respected by non-state actors; strict controls on the development and proliferation of such weapons, rather than statutes alone, would therefore be imperative to keep them out of irresponsible hands!

The following principles of LOAC [4] merit examination in the context of the use of LAWS:

Distinction – It is imperative to distinguish between combatants involved in hostilities, those who are wounded or have surrendered, and the civilian population; the latter two groups are protected from attack under LOAC. A further dilemma in the use of LAWS is that individuals can shift rapidly between these categories, for instance when a combatant surrenders or is wounded. Equally, civilians may take part in hostilities in various capacities. This raises the associated conundrum of accountability: responsibility for a LOAC violation would not be easy to attribute, since a number of stakeholders, from software programmers to commanders in the field, contribute to the actions of a LAWS.

Proportionality, Military Necessity, Limitation and Caution – LOAC prohibits the use of excessive force and obliges parties to a conflict to take measures to minimise incidental casualties and collateral damage, which must not be excessive in relation to the concrete and direct military advantage anticipated. This necessitates detailed military planning and acuity of judgement during battle. Since conflict situations are amorphous and unpredictable at best, there is considerable scepticism about whether AI, however refined, can adapt prudently to the contingencies that arise once hostilities are joined. A UN report of March 2021 on the Libyan civil war observed that retreating rival troops were engaged by forces of the interim Libyan Government using autonomous LMs, including the Turkish Kargu-2. These LAWS were “programmed to attack targets without requiring data connectivity between the operator and the munition. In effect, a true ‘fire, forget and find’ capability” [5]. Such uncontrolled engagements can, for the most part, be deemed provocative and avoidable. Indeed, because the loss of a LAWS is far more acceptable than the loss of human life, such systems could lower conflict thresholds and make opposing sides ‘trigger-happy’, leading to rapid and undesirable escalation.

Good Faith, Humane Treatment and Non-Discrimination – It is incumbent upon opposing sides to show good faith in judging each other’s actions. Combatants and civilians must be treated humanely, without adverse distinction based on background, involvement or creed. Propriety of action, moral responsibility and respect for human dignity are essential attributes of an intuitive human participant in hostilities, allowing him or her to weigh the aforementioned principles of LOAC before inflicting lethal harm. These attributes are absent in a LAWS platform, regardless of its sophistication. In fact, greater sophistication and autonomy might prove counterproductive, since self-learning, deep-learning algorithms would make the system’s actions even less predictable.

Reciprocity – Violation of LOAC by one side does not justify non-adherence by the other. Since AI-driven LAWS react to circumstantial inputs and lack any sense of responsibility for adhering to these statutes, unbridled hostile action by one side is unlikely to meet with the required restraint from an adversary’s LAWS.

Why Are LAWS Likely to Create Ethical Dilemmas?

Advances in AI and intuitive machine learning would make LAWS less predictable, given the plethora of options such systems can generate. This runs the risk of the ‘means becoming the ends’, with AI-enabled LAWS seeking out soft targets that may or may not contribute directly to the war effort. Being immune to fatigue, LAWS can be employed for protracted periods, widening the window for unpredictable or irresponsible lethal action. With greater autonomy, and with no human in the decision loop, it would become increasingly difficult to intervene in, or deactivate, a LAWS that is acting in violation of LOAC.

Do LAWS Pose an Existential Risk?

It would be prudent to begin this argument with an accepted definition of existential risk: “any risk that has the potential to eliminate all of humanity or, at the very least, kill large swaths of the global population, leaving the survivors without sufficient means to rebuild society to current standards of living” [6]. As extrapolated to the risk posed by AI, “…if the AI technology is not developed safely, there is also a chance that someone could accidentally or intentionally unleash an AI system that ultimately causes the elimination of humanity” [7]. Realistically, the evolution (or degeneration) of AI-enabled LAWS to the point where such systems collaborate intelligently to pose an existential threat, in a global ‘drone-swarm’ Armageddon, may presently belong to the realm of fiction; it nonetheless remains a real danger if the underlying concerns are not properly addressed.

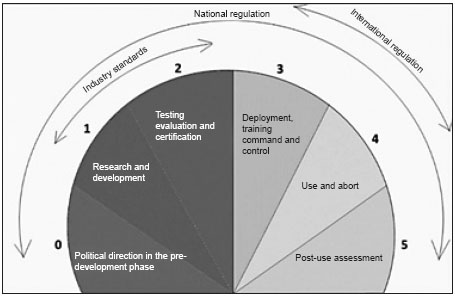

It is therefore imperative to urgently institute carefully deliberated statutes and strictures that allow positive control over the ‘behaviour’ of AI-enabled weapons and prevent the misuse of LAWS. Global restrictions on the use of autonomous or lethal weapons are not new. At the Fifth Review Conference of the CCW in 2016, States Parties agreed to establish a Group of Governmental Experts (GGE) on LAWS. The Guiding Principles governing the use of LAWS drawn up by the GGE were endorsed by the CCW in 2019 [8], in the presence of 83 participating nations, including India, and four observer states, and were also endorsed by the ICRC [9]. Some of these are discussed below:


- Human-Machine Interaction (HMI) MUST be retained in LAWS to ensure that they are used within the statutes of IHL. The quality and extent of HMI must be governed by factors such as operational context and weapon capabilities, so as to preserve the ability to modify mission parameters or deactivate the weapon system if required. States and parties to armed conflict must ensure individual responsibility for the employment of LAWS in accordance with their obligations under IHL.

- Requirements of IHL and LOAC including the principles of Distinction, Proportionality and Caution must be applied through a responsible command and control chain of human operators/commanders.

- LAWS MUST NOT be used if they are inherently indiscriminate or incapable of adhering to IHL/LOAC, nor may they be used to cause superfluous injury or unnecessary suffering.

- States must continuously conduct legal reviews throughout the study, development, acquisition or employment of LAWS.

- A shared understanding MUST be developed of the role of operational constraints on tasking, target profiles, time-frame of operation and operating environment in the use of LAWS. It is widely argued that the use of LAWS against human targets should be prohibited, which would in turn discourage the proliferation of ‘slaughterbot’-like anti-personnel drone-swarm technologies.

- AI hazards associated with LAWS, including possible bias in algorithms, immunity from intervention and the capability for self-adaptation or self-initiation, must be scrutinised and proscribed. The degree of unpredictability that can be introduced into LAWS must be quantified and regulated to curb the propensity of an AI-enabled LAWS to go rogue.

- Risk-mitigation measures MUST be incorporated during the design, development, testing and deployment of LAWS, including the development of and adherence to doctrine and procedures, rigorous testing and evaluation, legal reviews, training, and appropriately restrictive rules of engagement.

Conclusion

In a world in which armed forces and parties to conflict will increasingly seek to leverage the obvious advantages of LAWS in prosecuting operations at all levels of conflict, it is incumbent upon designers, developers, manufacturers and users of LAWS, as well as governing bodies, to establish fool-proof and future-ready safeguards in the design and scope of employment of such weapon systems. Only then can we rest assured that such potent FMs will not trigger an arms race or contribute to a global man-versus-machine crisis in the years ahead.

Endnotes

1. https://www.icrc.org/en/document/statement-icrc-lethal-autonomous-weapons-systems
2. http://www.nato.int/factsheets
3. https://finabel.org/defence-innovation-accelerator-for-the-north-atlantic-diana/
4. www.icrc.org/en/doc/assets/files/other/intro_final.pdf
5. https://documents-dds-ny.un.org/doc/UNDOC/GEN/N21/037/72/PDF/N2103772.pdf
6. https://futureoflife.org/background/existential-risk/
7. https://futureoflife.org/background/existential-risk/
8. https://documents-dds-ny.un.org/doc/UNDOC/GEN/G19/285/69/PDF/G1928569.pdf
9. https://www.icrc.org/en/document/icrc-position-autonomous-weapon-systems